April 24, 2024

Data Engineering for Cybersecurity, Part 2: Collection and Storage


In the first part of our series, we explored the challenges in today's security data landscape and the growing importance of data engineering in cybersecurity. We covered the increasing volume and complexity of security data, the limitations of traditional security approaches, and the critical role data engineering plays in helping organizations manage and use their security data effectively. We also categorized and dissected 22 common security data sources and discussed how each may fit into an organization's security program. I highly recommend checking out Part 1 if you haven't already!

In this second installment, we'll dive deep into data collection and storage, which form the foundation of any effective security data engineering strategy.

Data Collection

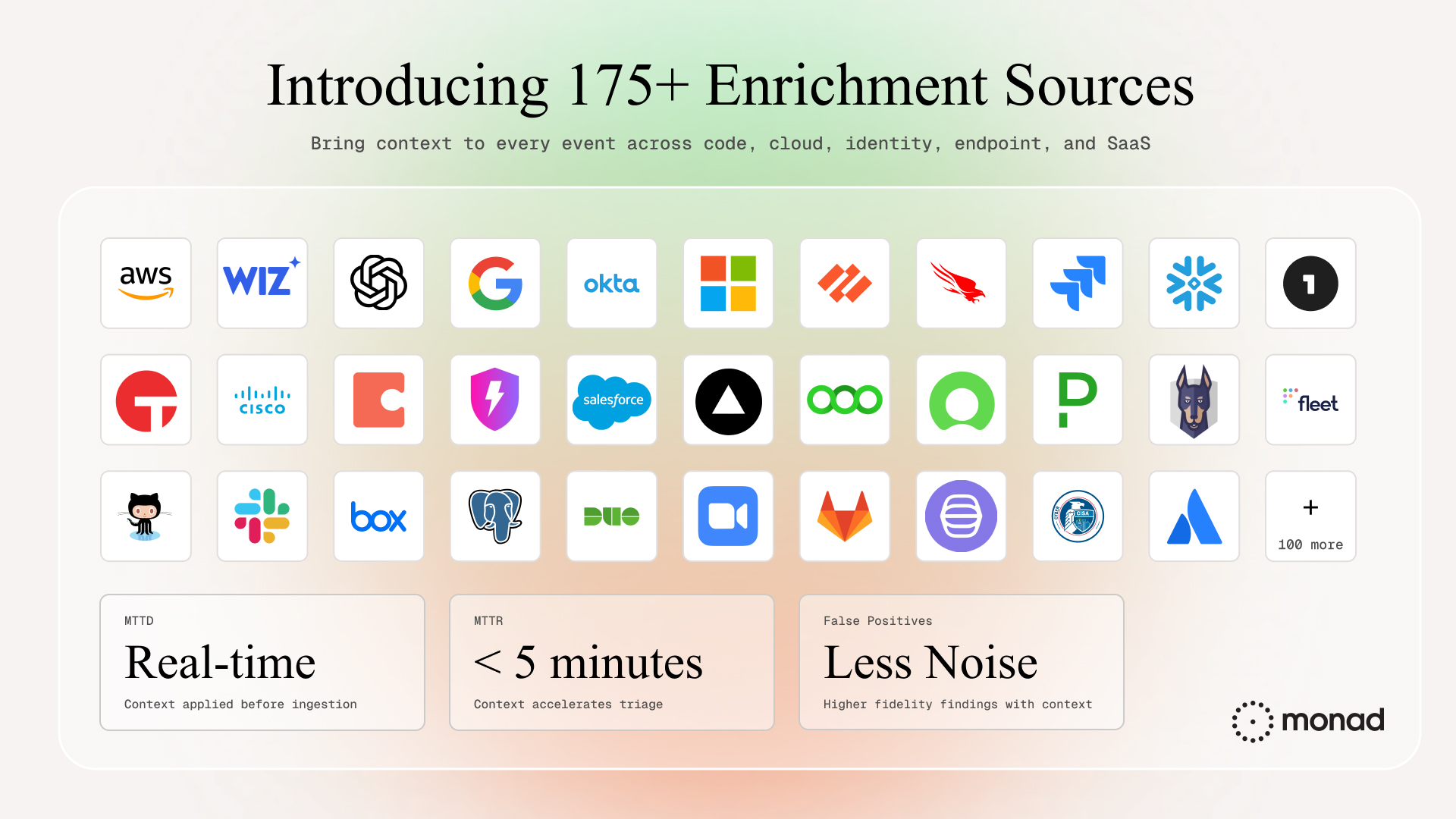

As we covered in Part 1, security-relevant data comes from a wide range of sources, including IoT devices, cloud services, applications, and on-premises systems. Efficient, scalable, and highly reliable data collection is essential to ensuring that security teams have access to the information they need to detect, investigate, and respond to threats. Below are the most commonly used data collection methods:

Agent-based Collection

An agent is software installed on a host (or deployed as a network sensor) that typically collects data from local sources (Pull) and then sends it to a designated destination (Push). Details and examples:

- Endpoint agents collect data on user activities, system events, and file changes.

- Network agents monitor traffic and detect anomalies.

- Example: osquery, an open-source agent that exposes operating system data as SQL tables; its daemon (osqueryd) runs scheduled queries and pushes the results to a centralized server for analysis.
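To make the pull/push split concrete, here is a minimal Python sketch of what an agent does at its core: it pulls newly appended lines from a local log file and pushes them in batches to a destination. This is not how osquery works internally; `FileTailAgent` and the `sink` callable are hypothetical, and a production agent would also persist its offset across restarts, handle log rotation more carefully, and retry failed sends.

```python
import os
import socket
from datetime import datetime, timezone
from typing import Callable, List


class FileTailAgent:
    """Hypothetical minimal agent: pulls newly appended lines from a local log file
    and pushes them, in batches, to a caller-supplied sink."""

    def __init__(self, path: str, sink: Callable[[List[dict]], None], batch_size: int = 100):
        self.path = path
        self.sink = sink              # push destination, e.g. a function that POSTs JSON
        self.batch_size = batch_size
        self.offset = 0               # bytes already collected (a real agent would persist this)
        self.host = socket.gethostname()

    def poll(self) -> int:
        """Collect complete lines appended since the last poll; return how many were sent."""
        if os.path.getsize(self.path) < self.offset:
            self.offset = 0  # file shrank (truncated or rotated): start from the beginning
        with open(self.path, "rb") as f:  # pull
            f.seek(self.offset)
            data = f.read()
        # Consume only complete lines; a partial trailing line waits for the next poll.
        complete, newline, _ = data.rpartition(b"\n")
        if not newline:
            return 0
        self.offset += len(complete) + 1
        now = datetime.now(timezone.utc).isoformat()
        events = [
            {"host": self.host, "source": self.path, "collected_at": now,
             "message": line.decode("utf-8", errors="replace")}
            for line in complete.split(b"\n")
            if line  # skip blank lines
        ]
        for i in range(0, len(events), self.batch_size):  # push
            self.sink(events[i:i + self.batch_size])
        return len(events)


# Usage (illustrative path): FileTailAgent("/var/log/auth.log", sink=print).poll()
```

In a real deployment, `sink` might send each batch as JSON to a collector over TLS; passing a plain function keeps the collection logic easy to test.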

Agentless Collection

API-based collection (Pull)

Application Programming Interfaces (APIs) provide programmatic access to data from various systems and services. This data can be pulled on-demand by making HTTP requests to specific API endpoints.

There are several types of APIs commonly used for security data collection:

- Management APIs: These APIs allow direct interrogation of a service's resources and configurations. For example, using the Okta API to retrieve user information or the AWS EC2 API to describe instance details.

- Event-based APIs: These APIs provide access to stored event data generated by a service. For instance, retrieving CloudTrail logs from an S3 bucket using the AWS S3 API.

- Streaming APIs: These APIs enable real-time data collection by subscribing to data streams. Examples include Amazon Kinesis streams and Apache Kafka topics, which can be consumed using their respective APIs.
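Pulling from an API usually means paging through results and remembering where the last run stopped, so the next poll only collects new events. The Python sketch below shows that pattern under stated assumptions: `fetch_page` is a hypothetical callable that wraps whatever HTTP request a given vendor API requires and returns a page of events plus a cursor for the next page (vendors expose pagination differently, e.g. cursor tokens or `Link` headers), and each event is assumed to carry an ISO 8601 `published` timestamp in one consistent format. `Checkpoint` and `pull_events` are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical page-fetcher interface: (cursor, since) -> (events, next_cursor).
# next_cursor is None on the last page. A real implementation would wrap an HTTP request.
FetchPage = Callable[[Optional[str], str], Tuple[List[dict], Optional[str]]]


@dataclass
class Checkpoint:
    """High-water mark so each poll only collects events newer than the previous run."""
    last_seen: str = "1970-01-01T00:00:00Z"


def pull_events(fetch_page: FetchPage, checkpoint: Checkpoint) -> Iterator[dict]:
    """Yield every event newer than the checkpoint, following pagination cursors."""
    cursor: Optional[str] = None
    newest = checkpoint.last_seen
    while True:
        events, cursor = fetch_page(cursor, checkpoint.last_seen)
        for event in events:
            # String comparison is valid only because timestamps share one ISO 8601 format.
            newest = max(newest, event["published"])
            yield event
        if cursor is None:
            break
    # Advance the checkpoint only after every page has been consumed,
    # so a failure mid-run causes a re-fetch rather than silently lost events.
    checkpoint.last_seen = newest
```

In production the checkpoint would be persisted between runs (a file or a database row), and deduplicating by event ID downstream covers events that share the boundary timestamp.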

Service-based collection (Push)

Many services and applications generate logs, metrics, and events that are pushed to a designated location or log aggregation system.

Examples of service-based log collection include:

- Syslog: A widely-used logging protocol that allows devices and applications to send log messages to a central syslog server.

- Windows Event Log: The built-in Windows facility that records events from the operating system, applications, and services on each host; Windows Event Forwarding (WEF) can push these events to a central collector.

- Cloud service logs: AWS CloudWatch Logs, Azure Monitor, and other cloud-native logging solutions that automatically collect and store log data from various cloud services and resources.
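Push-based collection inverts the relationship: the source decides when to send. As a minimal sketch, Python's standard-library `logging.handlers.SysLogHandler` can push an application event to a syslog listener over UDP. Here a local UDP socket stands in for the central syslog server; the logger name and message are illustrative.

```python
import logging
import logging.handlers
import socket

# Stand-in for the central syslog server: a UDP socket on localhost.
# Port 0 lets the OS pick a free port; a real collector usually listens on 514.
collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
collector.bind(("127.0.0.1", 0))
collector.settimeout(2.0)
collector_addr = collector.getsockname()

# Application side: push events to the collector using the auth facility.
handler = logging.handlers.SysLogHandler(
    address=collector_addr,
    facility=logging.handlers.SysLogHandler.LOG_AUTH,
)
logger = logging.getLogger("sshd-demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.warning("Failed password for invalid user admin from 203.0.113.7")

# Collector side: receive the datagram that was pushed.
datagram, _ = collector.recvfrom(4096)
print(datagram)  # begins with b"<36>", the syslog priority value

handler.close()
collector.close()
```

The `<36>` prefix is the syslog priority, computed as facility * 8 + severity (auth = 4, warning = 4). Plain UDP syslog offers no delivery guarantee or encryption, which is why production deployments often prefer TCP or TLS transport.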

Organizations often require a combination of agent-based and agentless methods to collect all security-relevant data, as some data sources can only be collected using one method or the other. For example, collecting system-level activity and real-time telemetry from endpoints (e.g. containers) typically requires an agent, while SaaS app logs (e.g. GitHub Audit Logs) can usually only be collected through API-based agentless methods.

When crafting a data collection strategy, it's key to consider factors such as data collection method, format variability, latency and velocity requirements, data volume, and the overall significance of each data source in the context of the organization's security goals.
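One way to make these factors concrete is to record them for every source in a simple inventory and rank sources by how much they matter. The Python sketch below does that; the field names, the 1-to-5 significance scale, the ranking rule, and the example values are illustrative assumptions, not measurements or recommendations.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Method(Enum):
    AGENT = "agent"
    API_PULL = "api_pull"
    SERVICE_PUSH = "service_push"


@dataclass
class DataSource:
    name: str
    method: Method
    data_format: str          # e.g. "json", "syslog", "csv"
    max_latency_seconds: int  # how fresh the data must be for detection use cases
    daily_volume_gb: float
    significance: int         # 1 (low) to 5 (critical), judged against security goals


def collection_plan(sources: List[DataSource]) -> List[DataSource]:
    """Order sources by significance, then by the strictest latency requirement."""
    return sorted(sources, key=lambda s: (-s.significance, s.max_latency_seconds))


# Illustrative inventory; values are placeholders, not benchmarks.
inventory = [
    DataSource("GitHub audit log", Method.API_PULL, "json", 900, 0.2, 3),
    DataSource("EDR telemetry", Method.AGENT, "json", 60, 40.0, 5),
    DataSource("Firewall syslog", Method.SERVICE_PUSH, "syslog", 300, 15.0, 5),
]
plan = collection_plan(inventory)
print([s.name for s in plan])
```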

Data Storage

Reliable and scalable data storage is essential for managing the vast amounts of security data generated by organizations today. Data storage solutions can be broadly categorized into on-premises and cloud-based options, each with its pros and cons.

On-premises Storage Solutions

On-premises storage solutions provide greater control over data management and security. However, they require significant upfront investment in hardware and ongoing maintenance, and they can be a poor fit for distributed, remote-first organizations.

- Traditional databases: SQL (e.g., MySQL, PostgreSQL) and NoSQL (e.g., MongoDB, Cassandra) databases

  - Well-suited for structured security data, such as user access logs, system configurations, and asset inventories (SQL), or semi-structured event data with varying fields (NoSQL).

  - SQL databases provide strong consistency and support for complex queries; NoSQL databases typically trade some of that for horizontal scalability and flexible schemas.

  - Example: Storing user authentication logs in a PostgreSQL database for easy querying and analysis.

- Distributed file systems: Hadoop Distributed File System (HDFS)

  - Ideal for storing large volumes of unstructured or semi-structured security data, such as network packet captures, system logs, and threat intelligence feeds.

  - Offers high scalability, fault tolerance, and cost-effective storage for big data workloads.

  - Example: Storing raw network traffic data in HDFS for long-term retention and batch processing.
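The PostgreSQL authentication-log example can be sketched in a few lines. To keep it runnable without a database server, this version uses Python's built-in `sqlite3` module as a stand-in; the table layout, sample rows, and the three-failure threshold are hypothetical. With PostgreSQL and a driver such as psycopg, the SQL is essentially the same, though the parameter placeholder is `%s` instead of `?`.

```python
import sqlite3

# sqlite3 stands in for PostgreSQL so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE auth_events (
        id        INTEGER PRIMARY KEY,
        ts        TEXT NOT NULL,      -- ISO 8601 timestamp (TIMESTAMPTZ in PostgreSQL)
        username  TEXT NOT NULL,
        source_ip TEXT NOT NULL,
        outcome   TEXT NOT NULL CHECK (outcome IN ('success', 'failure'))
    )
    """
)
conn.execute("CREATE INDEX idx_auth_user_ts ON auth_events (username, ts)")

# Illustrative sample events (documentation IP ranges).
events = [
    ("2024-04-24T10:00:00Z", "alice", "198.51.100.10", "success"),
    ("2024-04-24T10:01:00Z", "bob", "203.0.113.7", "failure"),
    ("2024-04-24T10:01:05Z", "bob", "203.0.113.7", "failure"),
    ("2024-04-24T10:01:09Z", "bob", "203.0.113.7", "failure"),
    ("2024-04-24T10:02:00Z", "bob", "203.0.113.7", "success"),
]
conn.executemany(
    "INSERT INTO auth_events (ts, username, source_ip, outcome) VALUES (?, ?, ?, ?)",
    events,
)

# Which account/IP pairs saw repeated failed logins? (threshold of 3 is arbitrary)
suspicious = conn.execute(
    """
    SELECT username, source_ip, COUNT(*) AS failures
    FROM auth_events
    WHERE outcome = 'failure'
    GROUP BY username, source_ip
    HAVING COUNT(*) >= 3
    """
).fetchall()
print(suspicious)  # [('bob', '203.0.113.7', 3)]
```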

Cloud Storage Solutions

Cloud storage solutions have grown in adoption over the past decade. They offer scalability, flexibility, and cost-effectiveness, capacity can be provisioned on demand, and many of them (notably cloud data warehouses) enable high-performance querying and analysis of large datasets.

- Object storage: Amazon S3, Cloudflare R2

  - Suitable for storing massive amounts of unstructured security data, such as log files, forensic disk images, and malware samples.

  - Provides high durability, scalability, and cost-effectiveness for long-term data retention.

  - Example: Storing compressed web server access logs in Amazon S3 for cheap, durable storage and on-demand access.

- Cloud data warehouses: Google BigQuery, Snowflake

  - Optimized for storing and querying large volumes of structured and semi-structured security data, such as event logs, threat intelligence indicators, and security alerts.

  - Offers fast query performance, automatic scaling, and built-in security features.

  - Example: Loading normalized security event data into BigQuery for real-time analysis and reporting.

- Data lakes: Azure Data Lake Storage, Databricks Delta Lake

  - Designed to store vast amounts of raw, unstructured, and semi-structured security data in its native format.

  - Provides a centralized repository for data ingestion, exploration, and analytics.

  - Example: Ingesting diverse security datasets (e.g., network flows, endpoint logs, threat intelligence) into a data lake for holistic analysis and threat hunting.
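For the Amazon S3 example, two details matter in practice: compressing batches before upload, and laying out object keys so query engines such as Amazon Athena can skip irrelevant data. The sketch below gzips a batch of access-log lines and builds a Hive-style partitioned key (`source=.../dt=.../hour=...`). The key layout, function names, and bucket name are assumptions; the upload call is shown commented out because it needs `boto3` and AWS credentials.

```python
import gzip
from datetime import datetime, timezone


def partitioned_key(source: str, event_time: datetime, batch_id: str) -> str:
    """Build a Hive-style object key so query engines can prune by source, day, and hour."""
    t = event_time.astimezone(timezone.utc)
    return f"logs/source={source}/dt={t:%Y-%m-%d}/hour={t:%H}/{batch_id}.log.gz"


def compress_batch(lines: list) -> bytes:
    """Gzip a batch of newline-delimited log lines before upload."""
    return gzip.compress("\n".join(lines).encode("utf-8") + b"\n")


# Illustrative access-log lines (documentation IP ranges).
lines = [
    '203.0.113.7 - - [24/Apr/2024:10:01:00 +0000] "GET /login HTTP/1.1" 401 512',
    '198.51.100.10 - - [24/Apr/2024:10:02:00 +0000] "GET / HTTP/1.1" 200 1024',
]
key = partitioned_key("nginx", datetime(2024, 4, 24, 10, 2, tzinfo=timezone.utc), "batch-0001")
body = compress_batch(lines)
print(key)  # logs/source=nginx/dt=2024-04-24/hour=10/batch-0001.log.gz

# Upload (requires boto3 and AWS credentials; bucket name is hypothetical):
# import boto3
# boto3.client("s3").put_object(Bucket="example-security-logs", Key=key, Body=body)
```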

Hybrid and Multi-cloud Storage Approaches

While less common, hybrid and multi-cloud storage approaches allow organizations to leverage the benefits of cloud storage while maintaining control over sensitive data on-premises. Some organizations have unique challenges requiring a multi-tiered approach.

- Hybrid storage: AWS Outposts

  - Combines on-premises infrastructure with cloud services, enabling organizations to store sensitive security data locally while leveraging cloud scalability and services.

  - Ideal for scenarios with strict data residency requirements or low-latency data processing needs.

  - Example: Storing security event data from critical on-premises systems in AWS Outposts for local processing and analysis.

- Multi-cloud storage: Pure Storage

  - Enables organizations to distribute their security data storage across multiple cloud providers, avoiding vendor lock-in and improving data resilience.

  - Provides a consistent data management and security layer across different cloud environments.

  - Example: Storing security logs and configurations across multiple clouds, with a management platform such as Google Anthos providing centralized management and policy enforcement.

Side note: While SIEMs are crucial for security operations, they are not designed for long-term data storage. Due to the high costs associated with SIEM storage, organizations typically retain data in SIEMs for only a short period (e.g., 90 days) before migrating it to more cost-effective storage solutions like Amazon S3. This approach allows for efficient security analytics while optimizing storage costs. Stay tuned for blog posts 4 and 6, where we'll dive deeper into security analytics and the evolution of SIEMs.

Data Temperature and Retention

Data temperature refers to how frequently data is accessed and how quickly it needs to be available. Considering data temperature when choosing a storage solution is crucial for optimizing cost and performance. Cloud providers offer different storage tiers, each with its own characteristics and pricing model. We'll use Amazon S3 as the example, but other major cloud providers offer comparable tiers.

Hot storage

Hot data is frequently accessed and requires fast retrieval. In the cloud, hot data is typically stored in object storage services like Amazon S3 Standard. Hot storage provides low latency and high throughput, making it ideal for log aggregation or building a security event data lake.

Sending high-volume data sources, such as VPC Flow Logs, directly to a SIEM can become very expensive, especially in high-traffic environments. Instead, ingesting this data into a hot storage bucket can significantly reduce costs while still enabling near-real-time access for SIEMs and analytics tools such as QueryAI or Amazon Athena.

Warm storage

Warm data is accessed occasionally and can tolerate higher access costs or slightly slower retrieval. In the cloud, warm data can be stored in storage classes like Amazon S3 Standard-IA (Infrequent Access), which costs less to store than S3 Standard in exchange for a per-GB retrieval fee and a 30-day minimum storage duration, while still returning data with millisecond latency. Warm storage is suitable for threat hunting, periodic security audits, or forensic investigations.

Cold storage

Cold data is rarely accessed and can be stored in lower-cost archival storage classes such as Amazon S3 Glacier Flexible Retrieval or S3 Glacier Deep Archive. These options provide the lowest storage costs, but objects must be restored before they can be read, which takes minutes to hours and incurs retrieval fees. Cold storage is ideal for long-term retention of historical security data required for compliance or regulatory purposes.

Retention requirements vary by industry and regulation. For example, requirement 10.5.1 in PCI DSS v4.0 requires organizations to retain audit log history for at least 12 months, with at least the most recent three months immediately available for analysis.
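Tiering and retention can be automated with an S3 lifecycle configuration rather than managed by hand. The Python sketch below builds one whose boundaries mirror the PCI DSS example: objects stay in S3 Standard for 30 days, move to Standard-IA (still immediately readable) until day 90, then transition to the `GLACIER` storage class (S3 Glacier Flexible Retrieval) and expire after 400 days. The prefix, rule ID, day counts, and bucket name are assumptions to adapt to your own retention policy.

```python
import json

# Tier boundaries chosen to keep ~3 months immediately readable and retain >12 months.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "security-log-tiering",
            "Filter": {"Prefix": "logs/"},  # hypothetical prefix
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},  # warm: still millisecond access
                {"Days": 90, "StorageClass": "GLACIER"},      # cold: restore needed before reading
            ],
            # Expire a little after one year; adjust to your own retention policy.
            "Expiration": {"Days": 400},
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))

# To apply it (requires boto3 and AWS credentials; bucket name is hypothetical):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-security-logs",
#     LifecycleConfiguration=lifecycle_configuration,
# )
```

Keeping the rule in code makes retention changes reviewable alongside other infrastructure changes.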

When selecting a data storage solution, consider factors such as data volume, growth, retention requirements, query performance, integration with analytics tools, cost implications, and access times for archived data. While cold storage can be cost-efficient, slow retrieval speeds can hinder incident response and investigations. However, emerging security data ETL solutions enable rapid access to archived data and seamless integration with SIEMs, allowing organizations to store historical security data cost-effectively while still being able to quickly retrieve and analyze it when needed.

Best Practices

Developing a strategy and making decisions for collecting and storing security data is no walk in the park. It requires close collaboration across teams, careful weighing of collection and storage factors, a deep understanding of the significance and sensitivity of various data types, a clear grasp of compliance requirements, and the knowledge of how to securely configure storage assets and manage access to them. Below are a few tips to consider on this journey:

Collection and Storage

- Implement a tiered storage approach to optimize cost and performance.

- Leverage cloud storage solutions for cost-effective and scalable log storage, especially for high-volume "noisy" logs.

- Avoid using SIEMs for long-term log storage due to high costs; leverage them for real-time analysis and alerting.

- Compress logs and encrypt them at rest to save space and enhance security.

- Establish redundancy and failover mechanisms.

- Automate log collection, parsing, and storage processes for efficiency and consistency. More on this in Part 3!

Data Security, Privacy, and Compliance

- Encrypt sensitive data at rest and in transit using strong encryption algorithms.

- Implement multi-factor authentication for access to critical security data.

- Enable detailed audit logging to track all access and modifications to security data.

- Regularly review and monitor access logs for suspicious activities.

- Comply with relevant privacy regulations (e.g., GDPR, CCPA) and industry standards (e.g., PCI DSS, HIPAA).

- Conduct regular security assessments and penetration testing of data storage systems.

- Regularly validate log data integrity and completeness through automated checks or sampling.
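Integrity and completeness checks can start simple: record a SHA-256 digest and line count for each log file when it is written, then periodically recompute and compare. The sketch below assumes the manifest is stored somewhere the log writer cannot modify (for example, a separate account or write-once storage such as S3 Object Lock); otherwise an attacker who alters a log could update the manifest too. `build_manifest` and `verify` are hypothetical helpers.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def build_manifest(paths: List[Path]) -> Dict[str, dict]:
    """Record a SHA-256 digest and line count for each log file at write time."""
    manifest = {}
    for p in paths:
        data = p.read_bytes()
        manifest[p.name] = {"sha256": hashlib.sha256(data).hexdigest(), "lines": data.count(b"\n")}
    return manifest


def verify(paths: List[Path], manifest: Dict[str, dict]) -> List[str]:
    """Compare current files against the manifest and describe every discrepancy."""
    problems = []
    present = {p.name: p for p in paths}
    for name, expected in manifest.items():
        p = present.get(name)
        if p is None:
            problems.append(f"missing: {name}")  # completeness: a recorded file disappeared
            continue
        data = p.read_bytes()
        if hashlib.sha256(data).hexdigest() != expected["sha256"]:
            line_count = data.count(b"\n")
            problems.append(f"modified: {name} (line count {line_count}, expected {expected['lines']})")
    for name in sorted(present.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")  # a file nobody recorded
    return problems
```

Running `verify` on a schedule and alerting on any non-empty result turns this bullet into an automated control.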

Data Governance

- Define clear data ownership and responsibilities.

- Establish granular access controls based on the principle of least privilege.

- Develop and enforce data retention policies in line with legal and regulatory requirements.

- Implement strict data handling and classification procedures.
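Least privilege for a log bucket can be expressed directly in policy. The sketch below builds two AWS IAM policy documents as Python dictionaries: a read-only identity policy that lets analysts list and read one prefix of a log bucket (and nothing else), and a bucket policy statement that denies any request not made over TLS. The bucket name and prefix are hypothetical; the documents would be attached through IAM and the S3 bucket policy, respectively.

```python
import json

BUCKET = "example-security-logs"  # hypothetical bucket name

# Identity policy for analysts: list and read objects under logs/ only; no write or delete.
analyst_read_only = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListSecurityLogPrefix",
            "Effect": "Allow",
            "Action": "s3:ListBucket",
            "Resource": f"arn:aws:s3:::{BUCKET}",
            "Condition": {"StringLike": {"s3:prefix": ["logs/*"]}},
        },
        {
            "Sid": "ReadSecurityLogObjects",
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/logs/*",
        },
    ],
}

# Bucket policy guardrail: refuse any request that does not use TLS.
deny_insecure_transport = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyInsecureTransport",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
            "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        }
    ],
}

print(json.dumps(analyst_read_only, indent=2))
print(json.dumps(deny_insecure_transport, indent=2))
```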

Key Takeaways

- Perform an inventory of which security data sources are available to your team and what the appropriate data collection methods (e.g. agent-based or agentless) are for those sources.

- Select data storage solutions that align with your organization's specific needs, considering factors such as data volume growth over time, retention requirements, query performance, and cost.

- Optimize storage costs and retrieval times by leveraging different storage tiers (hot, warm, cold) based on data temperature and access frequency.

- SIEMs are not long-term storage solutions.

- Prioritize data security, privacy and compliance when making data collection and storage decisions.

- Establish a robust data governance framework that defines data ownership, access controls, retention policies, and handling procedures.

- Foster collaboration among security, data engineering, and compliance teams to ensure a holistic and effective approach to security data collection and storage.

Conclusion

Effective data collection and storage are the cornerstones of a successful security program. By understanding the various methods, options, and considerations involved in security data collection and storage, organizations can build a strong foundation for their security program.

This foundation enables security teams to efficiently collect, store, and analyze the data they need to detect, investigate, and respond to threats, while also supporting compliance, reporting, and other key security initiatives.

In Part 3 of our series, we'll explore the critical aspects of data processing, transformation, and integration, and why they are a necessary evil in unlocking the full potential of security data.

Stay Tuned!

Stay ahead of emerging security challenges with our innovative approach to security data ETL. Subscribe now for our monthly newsletter, sharing valuable insights on building a world-class, data-driven security program and to be notified when our early access program launches!
