June 20, 2024

Data Engineering for Cybersecurity, Part 3: Data Processing

Introduction

Welcome back to the Data Engineering for Cybersecurity blog series! In Part 1, we explored the fundamentals of security data, different data types, and their use cases. Part 2 focused on the importance of developing a robust data collection and storage strategy, best practices for doing so and how it fits into the broader security picture.

In this third installment, we will explore common data processing functions such as normalization, standardization, and parsing, why they are needed, and how they enable more effective critical security functions such as security operations (SecOps) and vulnerability management.

Importance of Data Processing

Data quality is the foundation of an effective SecOps program. Inconsistencies, missing context, or misalignment across data sources create blind spots that allow attacks to go unnoticed, often for months on end, leading to data breaches. The variety of data formats, volumes, and sources requires significant manual effort to wrangle and unify, including building and maintaining data pipelines, parsers, and transformation scripts. Getting data into a ready state for analysis often consumes a huge portion of a security team's time.

This overhead often leads teams to prioritize only their most crucial data sources, or worse, only the sources which are easy to obtain, leading to the loss of valuable context from other sources that could be vital for detection and incident response or understanding the organization's overall security posture.

Implementing a robust strategy and infrastructure for data processing is essential for maturing SecOps. To do this successfully, security teams need a deep understanding of data engineering practices and tools, such as parsing, normalization, standardization, and an intimate knowledge of each source's fields and insights they bring, as well as how they correlate with signals from other security tools.

Well-designed data processing pipelines enable security teams to convert raw, heterogeneous data from multiple sources into a clean, consistent, and actionable format. This allows them to build high-fidelity detections, investigate incidents effectively, and gain a clear understanding of their organization's true security risk posture.

In this post, we'll explore the most common forms of data processing in security, provide examples, and demonstrate its importance. We’ll focus more on the “why”, while in Part 4 we'll focus on data pipelines and ultimately, “how” data processing and transformation can be achieved.

Addressing Data Quality Challenges

To highlight just how much inconsistency there is across data sources, let’s take a look at something as simple as how emails are displayed across arguably the three most popular identity data sources: Okta, Google Workspaces (GWS) and Microsoft Entra ID (previously Azure Active Directory).

As you can see, each solution has a different field name for something as simple as email addresses. Suppose a security team wants to create a detection rule to 1) identify any user who has attempted to log in from a new location and 2) has failed more than 3 attempts. Before any data processing, they might write a query like this:

SELECT

CASE

WHEN email IS NOT NULL THEN email

WHEN emails IS NOT NULL THEN emails

WHEN mail IS NOT NULL THEN mail

END AS user_email,

COUNT(*) AS failed_attempts

FROM login_events

WHERE status = 'failed' AND location NOT IN (

SELECT location

FROM successful_logins

WHERE

(login_events.email = successful_logins.email OR

login_events.emails = successful_logins.emails OR

login_events.mail = successful_logins.mail)

)

GROUP BY

CASE

WHEN email IS NOT NULL THEN email

WHEN emails IS NOT NULL THEN emails

WHEN mail IS NOT NULL THEN mail

END

HAVING COUNT(*) > 3;Disclaimer: Please note that the field names 'login_events' and 'successful_logins' also vary across the data sources used in this example. For simplicity's sake, we have standardized these field names to 'login_events' and 'successful_logins' to illustrate the concept. In practice, you will need to adjust the field names according to the specific schema used by each data source.

This query uses a ‘CASE’ statement in the ‘SELECT’ clause to standardize the email field (email, emails, or mail) as ‘user_email’. The ‘WHERE’ clause checks if any email-related fields in ‘login_events’ match those in ‘successful_logins’. This approach accounts for different email field names used by Okta, GWS, and Microsoft Entra ID, ensuring correct correlation of failed login attempts with successful logins.

While this query manually addresses inconsistent field names, it highlights the complexity and potential performance issues of handling varied data structures in cybersecurity. As data sources and field variations increase, the query becomes harder to maintain and impacts performance leading to higher compute costs and crippling blind spots.

The average large enterprise security team has over 76 security tools to handle, each generating different data formats and structures. Often these data sources have poor documentation about their schemas and output formats leading to security teams spending time figuring out the data structure and field meanings, making it harder to quickly and accurately process the data.

Now imagine having to do this manual normalization and deciphering of output schema across a wide number of data sources. It’s simply unsustainable yet, it’s the reality that many teams face today.

In an ideal scenario, the security team would implement data standardization as part of their data processing pipeline, so that the detection query can be written using a single, consistent field name for email addresses, across any data source that includes a field for email addresses. The query would look like this:

SELECT email, COUNT(*) AS failed_attempts

FROM login_events

WHERE status = 'failed' AND location NOT IN (

SELECT location

FROM successful_logins

WHERE email = login_events.email

)

GROUP BY email

HAVING COUNT(*) > 3;The above example highlights why standardization is a key function of data engineering. Other critical data processing functions for security logs and other data types are parsing and normalization.

Parsing, Normalization, and Standardization

Parsing is the process of analyzing raw, unstructured data to extract the meaningful components. For example, when analyzing firewall logs, parsing helps isolate critical data points like blocked IP addresses and the times at which these blocks occurred. Extracting these data points from unstructured data sets paves the way to identifying patterns, anomalies and correlating with signals from other sources.

Usually, high-volume data sources like EDR logs or any log type having to do with network traffic are great candidates for parsing because most of the actionable insights derive from identifying specific events and patterns that are often hidden in large, unstructured data streams. Efficient parsing makes it so much easier to extract and format the data that you need.

Log parsing is often done using Regular Expressions (RegEx), Grok, and shell scripting and can be done directly within a data pipeline. Many SIEMs have parsers that are based on the technologies mentioned above. For example, Elastic’s Logstash uses Grok to enable parsing functions. Below is an example of what parsing typically looks like:

Raw Data: 2024-06-10 12:34:56,INFO,User login,steven@celtics.com,IP: 192.168.1.1

Parsed Data

{

"timestamp": "2024-06-10 12:34:56",

"level": "INFO",

"message": "User login",

"email": "steven@celtics.com",

"IP": "192.168.1.1"

}Normalization involves organizing data to remove any unstructured or redundant elements. The primary goal here is to achieve a consistent and standardized data format across the scope in question.

For example, suppose you have vulnerability scan reports from different tools (e.g., Nessus, Qualys, OpenVAS). Each tool might represent vulnerabilities differently, with various fields and structures. Normalizing this data would involve transforming all the vulnerability data into a consistent format with standardized fields such as ‘vulnerability_id’, ‘severity’, ‘description’, and ‘affected_system’. OCSF, which we’ll talk about more in a bit, does a great job of normalizing supported vulnerability finding data sources under their schema which can be found here.

This process might involve eliminating redundant data (e.g., duplicate vulnerability entries) and ensuring all entries conform to a unified schema. This makes it easier to aggregate, analyze, and prioritize vulnerabilities across different tools, making the overall vulnerability management process more streamlined and reducing the risk of missing critical vulnerabilities due to inconsistent data formats.

Standardization is the process of converting data to a common format or set of conventions. Standardization is what enables the integration of data from diverse sources.

For example, suppose you have log entries from different security tools that use different date and time formats. One tool might use ‘MM/DD/YYYY HH:MM:SS’, another might use

‘YYYY-MM-DDTHH:MM:SSZ’, and another might use a completely different format. Standardizing these log entries involves converting all date and time formats to a single, common format, such as ‘YYYY-MM-DD HH:MM:SS’.

This allows logs from all your tools to be accurately correlated based on timestamps which makes it easier to perform investigations, threat hunts, and incident response. By ensuring that all timestamps follow the same convention, standardization eliminates inconsistencies that could introduce gaps or blindspots.

Can’t We All Just Get Along?

This is not a new challenge. People have been wrangling data source inconsistencies for decades, yet we’ve still not been able to align on a unified data schema for all security data sources.

While there are some tools that output in the same schema (i.e., JSON) and support the same formats (i.e., CEF), there is still much variance even in how certain key fields are displayed. This is what we saw in our Okta, GWS, and Entra ID example. Though all three data sources output in JSON format, they label the “email” field differently which is what introduces the need for data processing.

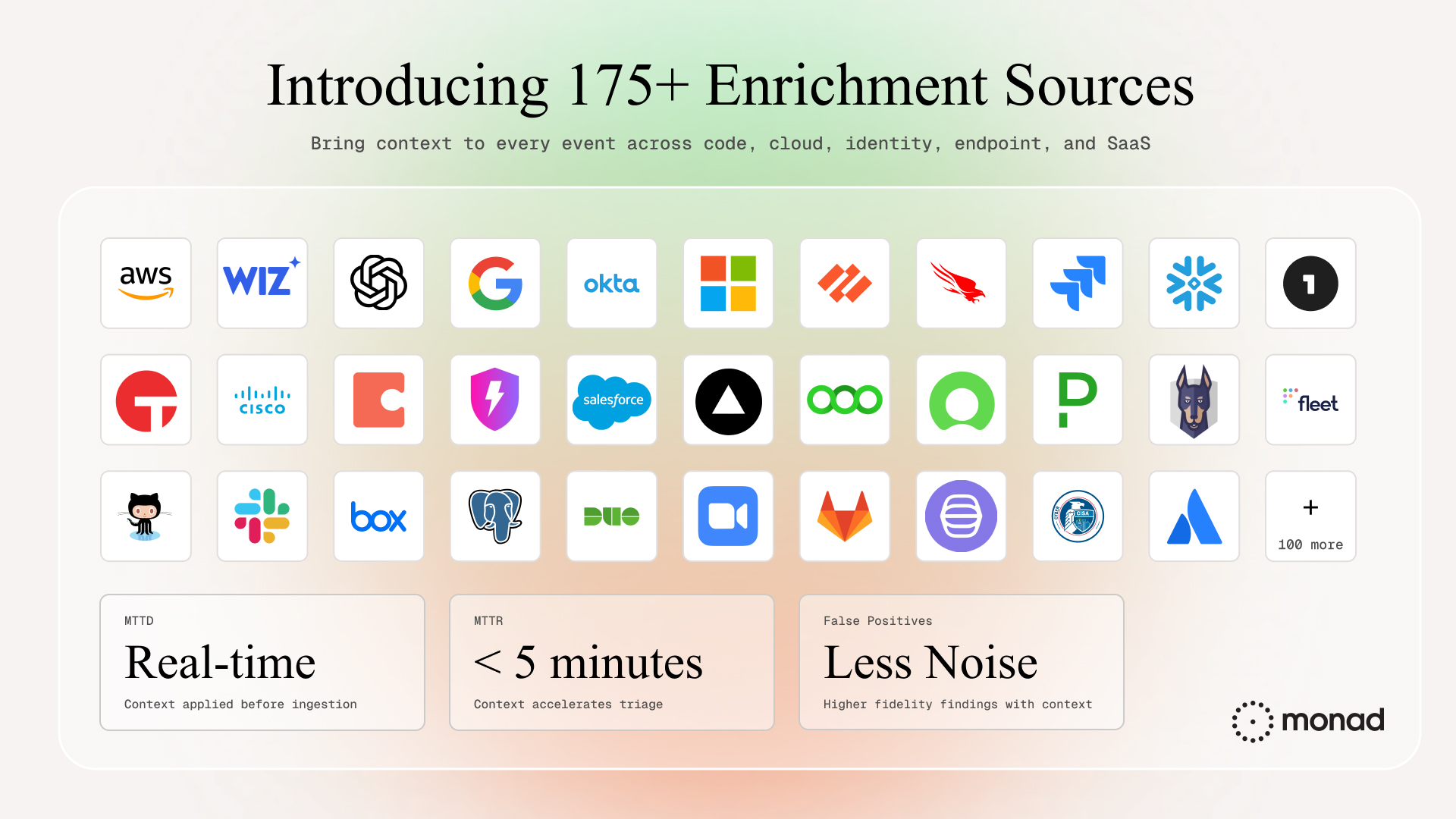

Not all hope is lost however. Aside from adopting the data processing techniques highlighted in this and the following post, security teams can also leverage the Open Cybersecurity Schema Framework (OCSF) which is a vendor-agnostic, open-source initiative aimed at standardizing the format of security data across different tools. The project was launched in 2022 and led by AWS and Splunk. Today, over 150 security vendors contribute to and support the project including Monad, Wiz, CrowdStrike, and Snowflake.

OCSF does a great job of standardizing the most atomic units, field names, across data sources for a given domain. For example, all fields containing a compliance-related finding get standardized under the field name “compliance” which then includes the compliance requirements in violation. This is helpful for streamlining compliance efforts that rely on multiple sources. Today, OCSF v1.2.0 supports 59 event and finding types across 6 categories and is compatible with just about any output destination including SIEMs, data warehouses and cloud storage.

Another notable effort is the Elastic Common Schema (ECS), which provides a consistent way to structure and organize data within the ELK stack ecosystem.

ECS and OCSF are steps in the right direction, and it's encouraging to see the significant traction and adoption of OCSF in just two years. However, not all event and finding types are supported, nor are all security data sources covered. Therefore, the need for security teams to focus on data engineering remains crucial.

Conclusion

Data processing has been at the core of SecOps for decades and yet, we’re still fighting an uphill battle to address the challenges of inconsistent data formats and the significant manual effort required to unify these diverse data sources. Data parsing, normalization, and standardization are crucial for accurate security analysis, data correlation, efficient investigations, and threat detection.

Adopting industry frameworks like OCSF and training your security teams to be more data savvy can help bridge the gap presented by the inconsistencies across security data sources. Hopefully this post did a great job of highlighting the problem and how your security team can begin solving it.

In our next post, Part 4 of this series, we will dive into data pipelines, streaming v. batching, and the tactical aspects of data processing and transformation!

Stay Tuned!

Stay ahead of emerging security challenges with our innovative approach to security data ETL. Subscribe now for our monthly newsletter, sharing valuable insights on building a world-class, data-driven security program and to be notified when our early access program launches!

Related content

Darwin Salazar

|

October 30, 2025

.png)

.jpeg)